Solutions overview

Secure AI use at enterprise scale

AI is now everywhere, embedded in popular GenAI and SaaS applications, agents, and developer tools. This rapid proliferation introduces significant risk. Traditional firewalls cannot protect AI environments, and emerging point solutions fail to address risk at enterprise scale.

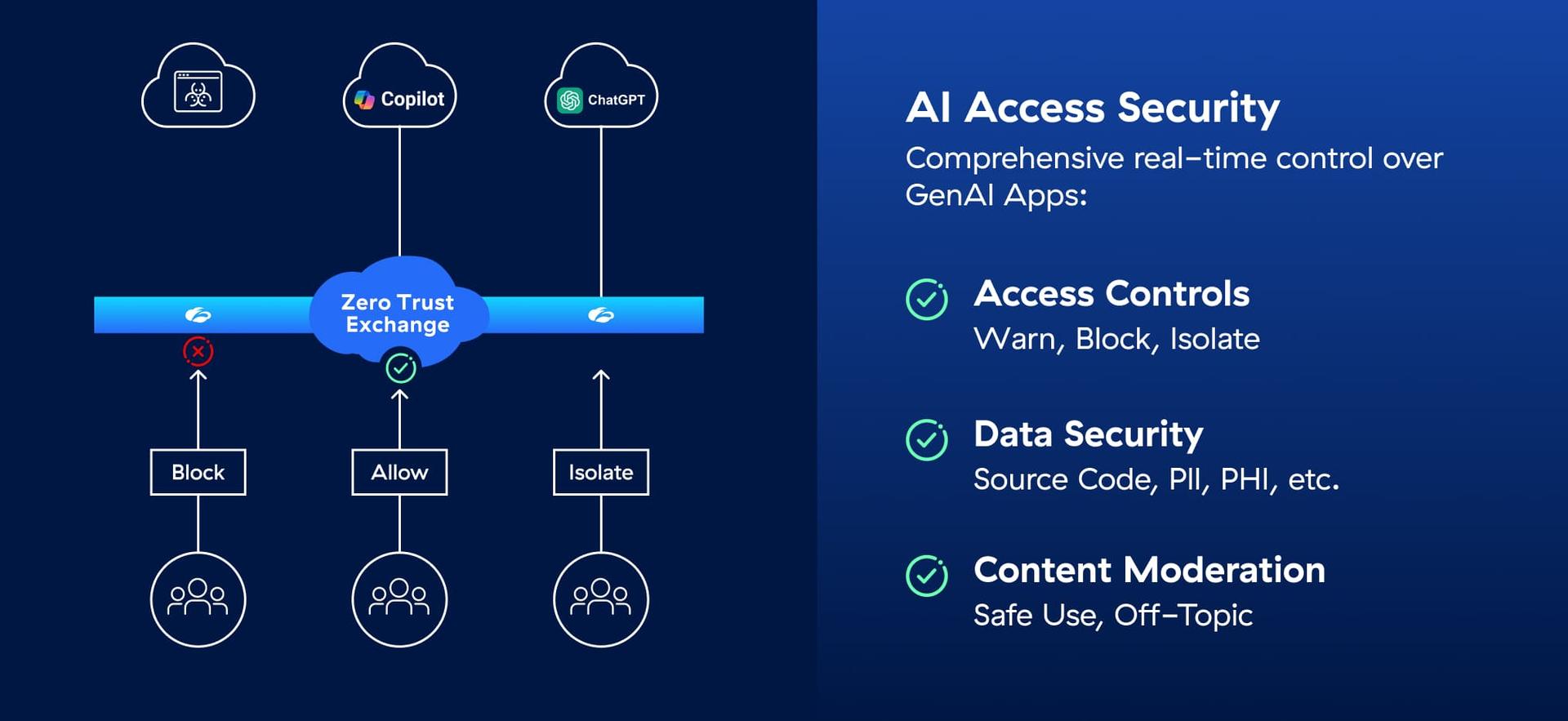

Zscaler provides Zero Trust access controls, content moderation, and robust protections to help your business stay secure while it accelerates AI adoption.

Benefits

Get user-based access controls

Discover which AI applications are in use and which users are using them. Allow, block, or coach access by user or user group.

Control AI interactions

Get visibility into prompt content, along with classification and moderation. Disable actions and keep sensitive data from leaving your organization.

Zero Trust access to AI developer tools

Give developers secure access to AI tools. Protect the data and AI infrastructure these tools access with inline controls.

Complete AI Security Is Here—Get a Front-Row Seat!

Explore new Zscaler innovations for AI discovery, red teaming automation, and runtime protection—built for governance and compliance.

Solution details

Take full control of AI use in your organization

Get complete visibility, control, and protection across all AI with Zscaler. Discover shadow AI, control access, and moderate prompts and responses to prevent data loss and enforce company policy. Protect AI infrastructure and data access from AI-powered development tools.

Discover and classify thousands of AI applications, including AI embedded in popular SaaS applications. Get in-depth visibility into users, departments, application trends, and data at risk with interactive dashboards.

Get a clear view of how users interact with their applications, including deep insights from prompt and response extraction and classification.

Use flexible policies to warn, block, or apply browser isolation, giving you full control over copy and paste actions within AI applications.

Block sensitive data loss in prompts with powerful inline DLP that draws on more than 100 DLP dictionaries, such as source code, PII, PCI, and PHI.

Detect off-topic or policy-violating use, including toxic, restricted, or competitive topics, by analyzing prompts and responses. Apply inline controls to keep AI use safe and compliant.

Give developers Zero Trust access with inline controls for AI integrated development environments and tools that connect to AI infrastructure, to prevent data loss and protect against cyberthreats.

ThreatLabz AI Security Report

Enterprise AI/ML traffic volume increased 3,464.6% year over year.

See the other findings from our analysis of more than 536 million transactions.

Use cases

Put AI to work for your business

Define and control which AI tools your users can and cannot access.

Ensure sensitive data never leaks to AI through a prompt or query.

Implement granular controls that allow prompts but prevent bulk uploads of important data.

Enforce safe, compliant GenAI use across your entire workforce.